2023-11-13 - Zone based skyboxes

New week, and new tasks from our TODO list from last Friday.

* allow different backgrounds for each zone (skyboxes, scenes, video passthrough)

* improve architecture (even better logic/visualization separation)

* reuse platforms instead of instantiating/destroying them (use a pool)

Let's start from the skyboxes.

If you're not familiar with the concept, skyboxes are a decades-old computer graphics trick to provide a background to a 3D render. To render a skybox you can use a special kind of texture or, as in our case with the skybox material provided by Unity, you can have it procedurally generated from some parameters (e.g. sky color, ground color...).

At this stage, I don't care much about the look or quality of the skyboxes, I just want to emphasize that the bridge portals bring you to a different place, and changing the background should be an effective way to do that.

I defined three different skybox materials, duplicating the original one and changing the colors.

I renamed the original one as `mat_skybox_corridorZone` and named the two new ones `mat_skybox_leftTriZone` and `mat_skybox_rightTriZone`. Instead of the cyan/magenta colors of the original one, I picked a blue/orange pair for `leftTriZone`, and flipped it to orange/blue for `rightTriZone`.

I added these materials to the (very temporary) resource manager of the game, and added a method to fetch them by name.

Then, I added a `Skybox` component to the cameras, so that they can render a specific skybox as background, and not the global one that Unity lets you define in the environment lighting window.

With these preliminary operations done, I went and added:

* a new field to the `ZoneDesc` structure, adding a string identifier to identify the material to use for that zone skybox

* a new field to the `Zone` class, to hold a reference to the actual material, fetched from the resource manager when instantiating a zone from a zone description
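To make the two additions concrete, here is a minimal sketch rather than the project's actual code. Only the names `ZoneDesc`, `Zone` and `getSkyboxMat()` come from this devlog. The field name `m_sSkyboxMatId`, the `IMaterialLookup` interface and its dictionary-backed implementation (standing in for the temporary resource manager), and the generic `TMat` type (which would be `UnityEngine.Material` in the real project) are all assumptions, chosen so the snippet compiles outside Unity.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for the resource manager: it only needs to
// resolve a material by name. TMat would be UnityEngine.Material in Unity.
public interface IMaterialLookup<TMat> {
    bool TryGetMaterial(string sName, out TMat rMat);
}

// Hypothetical dictionary-backed lookup, enough for a quick test.
public sealed class DictionaryMaterialLookup<TMat> : IMaterialLookup<TMat> {
    private readonly Dictionary<string, TMat> m_rMats =
        new Dictionary<string, TMat>();

    public void Add(string sName, TMat rMat) { m_rMats[sName] = rMat; }

    public bool TryGetMaterial(string sName, out TMat rMat) {
        return m_rMats.TryGetValue(sName, out rMat);
    }
}

// Plain data, as it would be deserialized from a zone JSON file.
// The field name is an assumption: the text only says "a string identifier".
[Serializable]
public class ZoneDesc {
    public string m_sSkyboxMatId;
}

// Runtime zone: resolves the identifier once, when the zone is instantiated.
public class Zone<TMat> {
    private readonly TMat m_rSkyboxMat;

    public Zone(ZoneDesc rDesc, IMaterialLookup<TMat> rLookup) {
        TMat rMat;
        if (!rLookup.TryGetMaterial(rDesc.m_sSkyboxMatId, out rMat)) {
            throw new KeyNotFoundException(
                "Unknown skybox material: " + rDesc.m_sSkyboxMatId);
        }
        m_rSkyboxMat = rMat;
    }

    public TMat getSkyboxMat() { return m_rSkyboxMat; }
}
```

Resolving the name at instantiation time means a typo in a JSON file fails loudly when the zone is created, instead of silently rendering the wrong background later.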

I manually edited the JSON files of the three test zones so that each of them refers to the proper material and, as a last step, I went and modified `PortalRendererBhv` again:

    private void adjustSkyboxes() {
        Material rMainCamSkyboxMat =
            ZoneManagerBhv.i().getCurrZone().getSkyboxMat();
        Material rPortalCamSkyboxMat =
            PortalManagerBhv.i().isBridgePortal() ?
                ZoneManagerBhv.i().getAdjZone().getSkyboxMat() :
                rMainCamSkyboxMat;

        m_rMainCamSkybox.material = rMainCamSkyboxMat;
        if (Camera.main.stereoEnabled) {
            m_rPortalCamRSkybox.material = rPortalCamSkyboxMat;
            m_rPortalCamLSkybox.material = rPortalCamSkyboxMat;
        } else {
            m_rPortalCamMonoSkybox.material = rPortalCamSkyboxMat;
        }
    }

Everything worked as expected on the first attempt. Isn't it nice when it happens?

Here's a short gameplay clip showing the new feature.

Soon I'll probably try and use the mixed reality passthrough as skybox.

2023-11-14 - Startup configuration

I'm going to work on the initialization logic.

Usually, a VR headset SDK exposes some information about the room where the device is being used.

That information can then be used by the application, to various degrees of sophistication.

What I want to do is pretty simple: I want to position the hexagonal platform that defines our play area roughly at the center of the available space, properly rotated.

I realize this can feel like something non-critical, which could be postponed to a late "polishing" phase of the development, but it makes a lot of sense to do it now.

Why? Well, because it will save me a few seconds for each of the (thousands of) times that I will start a development build. Right now, I usually find the hexagonal platform at different locations in my working space (depending on where I am when I start the application, I think), and I have to recenter properly, which sometimes takes more than one attempt.

After implementing this feature, instead, I should never need to recenter again and the platform will be in the optimal position for any tracked space.

So, time to check the Meta SDK documentation for a bit.

Reading the docs, it looks like there is an API call which returns the "maximum" axis-aligned rectangle contained in the irregular boundary defined when using the headset in a physical environment. Which is exactly what I needed: in geometric terms, the problem to solve is centering and rotating the platform hexagon inside this "play area rectangle".

Centering it is trivial: the hexagon center must be at the center of the rectangle.

How to rotate it is also quite obvious: a flat-top hexagon is wider than it is tall, so its height should be aligned to the shorter side of the rectangle.

To make sure the idea is clear, I'm adding a simple image showing an irregular boundary border, the axis-aligned rectangular area contained in such border, and how we want to place the hexagon.

Currently, our platform radius is set to `1.2f`, so to completely fit the hexagon platform we would need a play area of:

* `width = 2 * radius = 2.40`

* `height = sqrt(3) * radius = 2.08`

This makes me wonder if I should resize the platform setting the radius to 1m, because if I remember correctly the expected room scale size minimum is 2x2 m. Requiring 2.40m might be too much. I'm going to think about it. But not now. Now, it's time to try the API calls.
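A quick check of the numbers, assuming the platform radius is the circumradius (center to vertex) of a regular flat-top hexagon:

```latex
\begin{aligned}
w &= 2r, \qquad h = \sqrt{3}\,r \\
r = 1.2\ \text{m}: \quad w &= 2.40\ \text{m}, \qquad h \approx 2.08\ \text{m} \\
r = 1.0\ \text{m}: \quad w &= 2.00\ \text{m}, \qquad h \approx 1.73\ \text{m}
\end{aligned}
```

Under that assumption, a 1 m radius would exactly fill the 2 m side along the hexagon width and leave about 0.27 m to spare along its height.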

As I found out when testing, the API calls to fetch the corners and the size of the play area don't work properly when using the headset connected to the PC (via Oculus Link). So, I had to test making builds and uploading them to my Quest, which took a bit of time.

I added to the scene four small blue spheres to be positioned at the corners of the play area (fetched with the `GetGeometry()` API call).

Then, to check the dimensions values (fetched with `GetDimensions()`) I added a debug panel where I can easily set some text at runtime. More convenient than checking the Android logging messages, at least in this scenario.

I then used this snippet to position the spheres and set the text on the panel:

    private void Update() {
        if (!OVRManager.OVRManagerinitialized) return;

        var rBoundary = OVRManager.boundary;
        if (rBoundary.GetConfigured()) {
            Vector3[] vGeometry = rBoundary.GetGeometry(
                OVRBoundary.BoundaryType.PlayArea
            );
            for (int i = 0; i < 4; ++i) {
                m_rCornersSpheres[i].transform.position = vGeometry[i];
            }

            Vector3 vDimensions = rBoundary.GetDimensions(
                OVRBoundary.BoundaryType.PlayArea
            );
            string sDebugText = string.Format(
                "Play Area size: {0:0.00}x{1:0.00}",
                vDimensions.x, vDimensions.z
            );
            DebugManagerBhv.i().setText(sDebugText);
        } else {
            string sDebugText = "Play Area not configured";
            DebugManagerBhv.i().setText(sDebugText);
        }
    }

You can see the result in the following short video.

Notice that, when recentering, the four spheres stay in place, as expected.

I also forced the guardian to appear to check that, indeed, it was passing through the spheres (so they were in the right position).

Now that I have the four points, calculating the position and rotation offset that I want for the hexagonal platform is easy. What is not obvious is: is there a way to just reset the Unity world center and world orientation to that "calculated origin" at runtime? Or will I need to manage that additional "root transformation" myself?

It wouldn't be a huge deal (I could adjust on the basis of this "calibration" a "worldRoot" node and place everything under that, using local coordinates), but it would feel cleaner to just reset the origin.

It's getting late, so I'm going to find out tomorrow what's the best course of action.

2023-11-15 - Finding the hexagon pose

So, yesterday I wrote that finding the optimal hexagon position and orientation inside the play area rectangle was easy. Time to do it.

The center position of the rectangle can be calculated as the average of the coordinates of its corners. So we can sum the four `Vector3` obtained from `GetGeometry()` and divide the result by `4`.

For the orientation, we explained that we want to align the hexagon height to the smaller side of the rectangle. So, we check which one of the two is shorter, and use the `Vector3` representing that side to calculate our `Quaternion`.

Let's see the code:

    Pose calcCenterPose(Vector3[] rCorners) {
        Vector3 vPos = Vector3.zero;
        for (int i = 0; i < 4; ++i) {
            vPos += rCorners[i];
        }
        vPos = vPos / 4f;

        Vector3 vSideA = rCorners[1] - rCorners[0];
        Vector3 vSideB = rCorners[2] - rCorners[1];
        Vector3 vShortSide =
            Vector3.SqrMagnitude(vSideA) < Vector3.SqrMagnitude(vSideB) ?
                vSideA : vSideB;

        Quaternion qRot = Quaternion.LookRotation(vShortSide, Vector3.up);
        return new Pose(vPos, qRot);
    }

Note that we assume that `rCorners` has length `4` (which is something I checked in a revised version of yesterday's code, not worth showing).

Also note that I used `Vector3.SqrMagnitude()` and not `Vector3.Magnitude()`: I only need to know which side is shorter, not their lengths (which I know from the other API call, `GetDimensions()`, anyway). Not that we are thinking much about performance, but it's always good to save a square root computation if it's not needed.
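As a sanity check outside Unity, the same computation can be sketched with `System.Numerics`. This is not the project's code: `PlayAreaMath`, `CenterAndYaw` and the choice of returning a yaw angle instead of a `Quaternion` are assumptions made so the snippet runs as plain C#. Like the original function, it assumes the corners are listed in order around the rectangle.

```csharp
using System;
using System.Numerics;

public static class PlayAreaMath {
    // Returns the rectangle center and the yaw (degrees, around +Y) that
    // turns the +Z axis onto the direction of the shorter side.
    // Assumes exactly four corners, listed in order around the rectangle.
    public static (Vector3 center, float yawDeg) CenterAndYaw(Vector3[] corners) {
        if (corners == null || corners.Length != 4) {
            throw new ArgumentException("Expected exactly 4 corners", nameof(corners));
        }

        // Average of the four corners.
        Vector3 center = (corners[0] + corners[1] + corners[2] + corners[3]) / 4f;

        // Two adjacent sides; comparing squared lengths avoids square roots.
        Vector3 sideA = corners[1] - corners[0];
        Vector3 sideB = corners[2] - corners[1];
        Vector3 shortSide =
            sideA.LengthSquared() < sideB.LengthSquared() ? sideA : sideB;

        // Yaw of a direction on the XZ plane: 0 degrees means "along +Z".
        float yawDeg = (float)(Math.Atan2(shortSide.X, shortSide.Z) * 180.0 / Math.PI);
        return (center, yawDeg);
    }
}
```

For a 1.83 x 1.38 rectangle centered on the origin whose short side runs along Z, this gives a yaw of 0. Swapping the two dimensions gives a yaw of 90 degrees in one direction or the other, depending on corner order, which is equivalent for a regular hexagon.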

To finish this other little step, I cleaned up a bit the code related to the visual debugging elements I added yesterday. Now, the four spheres at the corners of the play area only appear in debug mode.

Additionally, I added a fancy debug element to see the sides of the rectangle, with the length written on top. I also added an arrow to see the `Pose` calculated by `calcCenterPose()`, our "new" result of the day.

Here's a short clip showing the changes.

Basically, as you can see in the second part of the video, by walking to the yellow arrow and looking along its direction, and then using the recenter command from the Quest UI, I get the kind of setup that I want to be automatically calculated by the application. Of course, I can't be very precise this way, but it roughly works.

The next step is having it done automatically, so that players will never need to recenter.

In an ideal world, this would be just an API call away (something like `RecenterTo(Pose p)`). But if my preliminary search was correct, it's not going to be that easy. When is it ever?

2023-11-16 - Entering the stage (mode)

Yesterday we reached the point where we have the `Pose` that we want as our world origin and now we have to understand how to actually set it, and make it so the user-requested recentering does nothing (or, even better, is unavailable).

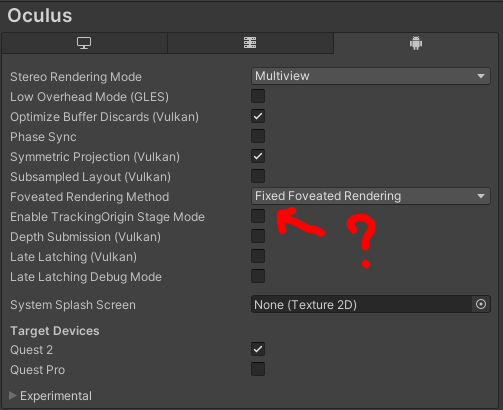

After a bit of googling and reading the SDK documentation, I went and changed a couple of settings in the `OVRManager` script attached to the `OVRCameraRig`.

I changed `Tracking Origin Type` from `Floor` to `Stage`, and I unchecked `Allow Recenter`.

From the documentation:

> Floor Level tracks the position and orientation relative to the floor, whose height is decided through boundary setup. Stage also tracks the position and orientation relative to the floor. On Quest, the Stage tracking origin will not directly respond to user recentering.

and:

> Allow Recenter: Select this option to reset the pose when the user clicks the Reset View option from the universal menu. You should select this option for apps with a stationary position in the virtual world and allow the Reset View option to place the user back to a predefined location (such as a cockpit seat). Do not select this option if you have a locomotion system because resetting the view effectively teleports the user to potentially invalid locations.

If you find this a bit confusing (there's a combination of settings which, as far as I understand, should not be accepted by the UI: `Stage` and `Allow Recenter`), well, I'm sorry: there's yet another setting related to this matter. It's not here on the `OVRManager`, but in the `Oculus` settings of the `XR Plug-in management`:

The documentation for this setting is:

> Enable TrackingOrigin Stage Mode - When enabled, if the Tracking Origin Mode is set to Floor, the tracking origin won't change with a system recenter.

Honestly, I don't understand the purpose of this setting - maybe it's from before you could select `Stage` in `OVRManager` as `Tracking Origin Type`, and is now useless?

Anyway, let's move on.

I tried a build with the new settings, and the experienced behaviour is interesting. The recenter action, as expected, does nothing. Still, I think that showing it greyed out in the system menu would be better for the user, making it clear that that functionality is not available. This way, it looks like something is not working: you request the recentering from the UI, and nothing happens.

With the `Stage` setting, the world origin (and, so, the center of the hexagon platform) is automatically placed at the center of the play area, which is what I wanted.

Unfortunately, I haven't seen any consistency in how the X and Z axes are matched to the two sides of the play area rectangle. If there was a consistent rule aligning a specific axis to the shorter side, I could have used that information to rotate the hexagon platforms accordingly. But changing the boundary setup, I have observed that sometimes the short side is aligned to the hexagon height, and sometimes it is aligned to the hexagon width.

Let's see two screenshots, before and after a reconfiguration of the play area boundary:

Here, I had a 1.83x1.38 (didn't do that on purpose, I swear) play area, and the rotation is correct: the hexagon height is aligned to the short side of the rectangle.

In the same game execution, changing the play area (forcing an execution of the guardian setup) I got instead this: a 2.26x1.73 play area, but the height of the hexagon is aligned to the 2.26m side (the longer). The arrow at the center of the platform, which should always be aligned to the height, is aligned to the width.

As I wrote yesterday, I always want the hexagon height on the direction of the short side, so that's not good enough. Back to the drawing board (and to Google).

Unfortunately, my search was unfruitful.

I understood that the `Stage` setting is inherited from the OpenXR spec, which says:

> `XR_REFERENCE_SPACE_TYPE_STAGE`. The `STAGE` reference space is a runtime-defined flat, rectangular space that is empty and can be walked around on. The origin is on the floor at the center of the rectangle, with +Y up, and the X and Z axes aligned with the rectangle edges. The runtime may not be able to locate spaces relative to the `STAGE` reference space if the user has not yet defined one within the runtime-specific UI. Applications can use xrGetReferenceSpaceBoundsRect to determine the extents of the `STAGE` reference space’s XZ bounds rectangle, if defined.

So, yes, the axes are aligned to the rectangle edges, and you can fetch the extents of the rectangle, but there's no rule specifying that, for example, "the X axis is always aligned to the shorter side of the rectangle, the Z axis to the longer". Am I the only one thinking it would be useful?

Anyway, it is what it is.

I also found other bad news: it looks like the `Stage` mode, at least in the past, didn't work properly when the headset went into sleep mode and then back into operation.

What else did I find? A lot of outdated hacks, complaints about problems similar to mine, and things that are very close to the solution but aren't the solution.

For example, it's very annoying that there IS an API call to request a recenter to the current headset pose (`OVRManager.display.RecenterPose()`), but that's it: you can't recenter to another, specific pose.

If only that same API had an optional parameter allowing to pass in a pose... if only.

Well, yet another day of game development, I guess!

I have wasted enough time trying to find a solution offered by the platform, it's now time to fix it at the application layer, which could also prove useful in the long term (porting to other engines or SDKs).

2023-11-17 - The origin of the world

Conceptually speaking, what I'm going to do is pretty simple.

We already put all the platforms of a zone under a "zone parent" node.

That's mostly to keep the scene tidy, and to be able to easily move the zones when the player moves from a zone to another. That's something I did because I wanted to always have the currently loaded zones near the origin. Specifically, the "current" zone must always have its pivot at the origin of the world.

Now, we must take control of that "origin of the world". It won't just be `Vector3.zero` and `Quaternion.identity`, but a `Pose` derived from the play area configuration.

The most straightforward way to do this is adding a `gameWorld` node at the root level of the scene, and adjusting the zone instantiation so that each "zone parent" is a child of `gameWorld`.

Then, we can control the `gameWorld` transform on the basis of the play area calibration (basically, setting its position and rotation to the ones returned by `RealityManagerBhv.i().getPose()`).

Adjusting `gameWorld`, of course, will adjust all its children accordingly.

So that's it? Basically, yes, but from now on I must be careful to not mess with global position and global rotation of nodes: if the current zone always started at the origin as I wanted, a local transform in the zone would have matched the world transform. Which can sometimes be convenient.

Now, instead, we will be forced to always work with transforms relative to the `gameWorld` node.
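What working relative to `gameWorld` means in practice can be sketched in plain C#. `WorldRoot`, `ToWorld` and `ToLocal` are hypothetical names, and `System.Numerics` stands in for Unity's math types. In Unity, `Transform.TransformPoint` and `Transform.InverseTransformPoint` on the `gameWorld` node do the same job, assuming the node is left at unit scale.

```csharp
using System.Numerics;

// A rigid transform (position + rotation, no scale) playing the role
// of the gameWorld node. Hypothetical type, for illustration only.
public readonly struct WorldRoot {
    public readonly Vector3 Position;
    public readonly Quaternion Rotation;

    public WorldRoot(Vector3 position, Quaternion rotation) {
        Position = position;
        Rotation = Quaternion.Normalize(rotation);
    }

    // gameWorld-local point -> world point: rotate first, then translate.
    public Vector3 ToWorld(Vector3 local) {
        return Position + Vector3.Transform(local, Rotation);
    }

    // World point -> gameWorld-local point: undo the translation,
    // then undo the rotation with the conjugate quaternion.
    public Vector3 ToLocal(Vector3 world) {
        return Vector3.Transform(world - Position, Quaternion.Conjugate(Rotation));
    }
}
```

With the root at (1, 0, 2) and rotated 90 degrees around +Y, a local point one meter along +Z ends up at (2, 0, 2) in world space, and `ToLocal` maps it back. Gameplay code that only ever stores local coordinates never needs to know where the play area calibration put the root.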

In a sense, having this restriction might make the code more robust and portable. See? The glass is half full.

I did exactly what I wrote, and adjusted the `Zone` and `Platform` instantiation methods so that they properly use local transforms.

After a bit of fiddling, everything worked as expected.

To easily test the behaviour in the Unity Editor, without having to build and change the guardian setup in the headset, I added an `#if` directive so that, when running in the editor, `RealityManagerBhv` fetches dimensions and geometry of the "play area" from a `TestPlayAreaBhv` script and not from the Oculus API calls.
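A sketch of how such a switch can look, with loud caveats: `IPlayAreaSource`, `FixedRectSource`, `DevicePlayAreaSource` and `PlayAreaSources` are hypothetical names, not the project's classes, and the device branch is left as a stub because the real one would wrap the headset SDK boundary calls. `UNITY_EDITOR` is the scripting symbol Unity defines when compiling for the editor; outside Unity it is undefined, so the `#else` branch is compiled.

```csharp
using System;
using System.Numerics;

// Hypothetical abstraction over "where do the play area corners come from".
public interface IPlayAreaSource {
    Vector3[] GetGeometry();
}

// Editor-side source: a hand-made rectangle centered on the origin.
public sealed class FixedRectSource : IPlayAreaSource {
    private readonly float m_fWidth;
    private readonly float m_fHeight;

    public FixedRectSource(float fWidth, float fHeight) {
        m_fWidth = fWidth;
        m_fHeight = fHeight;
    }

    public Vector3[] GetGeometry() {
        float hw = m_fWidth / 2f;
        float hh = m_fHeight / 2f;
        // Corners listed in order around the rectangle, on the floor plane.
        return new[] {
            new Vector3( hw, 0f, -hh),
            new Vector3( hw, 0f,  hh),
            new Vector3(-hw, 0f,  hh),
            new Vector3(-hw, 0f, -hh),
        };
    }
}

// Device-side source: stub only; the real one would query the headset SDK.
public sealed class DevicePlayAreaSource : IPlayAreaSource {
    public Vector3[] GetGeometry() {
        throw new NotSupportedException("Wrap the headset SDK boundary query here");
    }
}

public static class PlayAreaSources {
    // The preprocessor picks one branch at compile time, so the editor-only
    // code never ships in a device build.
    public static IPlayAreaSource Create() {
#if UNITY_EDITOR
        return new FixedRectSource(2.5f, 1f);
#else
        return new DevicePlayAreaSource();
#endif
    }
}
```

Keeping the choice behind one factory method means the rest of the code asks for corners the same way in both environments.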

It's not particularly interesting, but here's the script:

    using UnityEngine;

    namespace BinaryCharm.ParticularReality.TestManagement {

        public class TestPlayAreaBhv : MonoBehaviour {

            [Range(0.5f, 5f)]
            [SerializeField]
            private float m_fWidth = 2.5f;

            [Range(0.5f, 5f)]
            [SerializeField]
            private float m_fHeight = 1f;

            private Vector3[] m_rCorners;

            void Awake() {
                m_rCorners = new Vector3[4];
            }

            private void Update() {
                float fHalfWidth = m_fWidth / 2f;
                float fHalfHeight = m_fHeight / 2f;
                Vector3 vCenterOffset = transform.position;
                Quaternion vRot = transform.rotation;

                // The rotation is applied after adding the offset, so the
                // corners rotate around the world origin.
                m_rCorners[0] = vRot *
                    (vCenterOffset + new Vector3(fHalfWidth, 0f, -fHalfHeight));
                m_rCorners[1] = vRot *
                    (vCenterOffset + new Vector3(fHalfWidth, 0f, fHalfHeight));
                m_rCorners[2] = vRot *
                    (vCenterOffset + new Vector3(-fHalfWidth, 0f, fHalfHeight));
                m_rCorners[3] = vRot *
                    (vCenterOffset + new Vector3(-fHalfWidth, 0f, -fHalfHeight));
            }

            public Vector2 GetDimensions() {
                return new Vector2(m_fWidth, m_fHeight);
            }

            public Vector3[] GetGeometry() {
                return m_rCorners;
            }
        }
    }

As you can see in the following video:

* I can adjust position and Y rotation of the test node, and the world follows (this should not be needed if we stick to `Stage` as "tracking origin type", but is instead crucial if for some reason, like the problem with headset "sleep" I read about, we want to go back to `Floor`)

* when I change the play area dimensions so that the shorter/longer sides swap, the world gets reoriented accordingly, so that the hexagon height always stays aligned to the shorter side

If you watched carefully, you will have spotted a bug: the cyan "portal indicator", which is currently handled separately and not a part of the platforms, does not get rotated correctly.

I'm also not considering enemies and pickups, which already needed some reworking after introducing the loading of other zones.

Next week I'll start by fixing the indicators (probably refactoring their handling, too), do another bit of cleanup, and hopefully wrap this up and move forward.