Advanced Prototyping - Week #16

Motion capture and playback

2024-03-11 - Capture data, and when to save it

This week I have a bit of extra work to take care of, so I'm going to have less time available for Particular Reality. Hopefully I can manage to put in a couple of hours every day anyway, and tick something off the list of tasks I discussed at the end of week #15.

I'm going to prioritize writing code for the project, so the DevLog entry might end up less detailed than usual.

I started defining an `AnimClip<H, F>` generic data type that will store a motion capture.

`H` is going to be the header data type, and `F` the frame data type.

So, I'll have a place to store non-repeating information about the clip (the header), and then an array of frames each following the same structure.

I could have defined a specific `BodyAnimClip` class and avoided the generics, but they really don't add much complexity and feel a bit cleaner: I have a clear-cut separation between the high level operations dealing with clips, and the definitions of the data contained in such clips.

The first important question about the definition of a clip is: how does the capture happen?

In the past, I've handled this kind of thing by capturing frames at a fixed time interval (storing the capture frequency in the clip), or at a variable interval (storing a timestamp for each frame).

Both approaches have drawbacks.

The fixed time interval capture can get jittery: the actual delay between two captures is never exactly the same, but the playback assumes it is.

The variable time interval produces a better capture, but the clips with variable frame rate are harder to manage (you can't easily seek into the clip, or cut a slice of it).

Last time I did something like this, I used the variable time clips and added a kind of index of "key frames" every second. I had an instant lookup to the key frames, by second, and then I proceeded linearly scanning the frames nearby, knowing that the scan would be just for a few entries.
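For illustration, here's a minimal sketch of that kind of per-second index. It is not the code from the old project: `KeyFrameIndex` and `FindFrameBefore` are made-up names, and it assumes the frame timestamps are available as an ascending array of seconds. It uses plain .NET (`System.Math`) so it can be tried outside Unity.

```csharp
using System;

// Hypothetical helper: a per-second "key frame" index over ascending timestamps.
public sealed class KeyFrameIndex {

    private readonly float[] m_rTimestamps;          // frame timestamps, ascending, in seconds
    private readonly int[] m_rFirstFrameAtSecond;    // for each whole second s: first frame with ts >= s

    public KeyFrameIndex(float[] rTimestamps) {
        if (rTimestamps == null || rTimestamps.Length == 0) {
            throw new ArgumentException("at least one frame is required");
        }
        m_rTimestamps = rTimestamps;
        int iLast = rTimestamps.Length - 1;
        int iSeconds = (int)Math.Floor(rTimestamps[iLast]) + 1;
        m_rFirstFrameAtSecond = new int[iSeconds];
        int iFrame = 0;
        for (int s = 0; s < iSeconds; ++s) {
            while (iFrame < iLast && rTimestamps[iFrame] < s) ++iFrame;
            m_rFirstFrameAtSecond[s] = iFrame;
        }
    }

    // Index of the last frame with timestamp <= fTime, clamped to the valid range.
    public int FindFrameBefore(float fTime) {
        int s = Math.Clamp((int)Math.Floor(fTime), 0, m_rFirstFrameAtSecond.Length - 1);
        int i = m_rFirstFrameAtSecond[s];   // instant lookup by second
        // short linear scan forward, at most one second worth of frames
        while (i + 1 < m_rTimestamps.Length && m_rTimestamps[i + 1] <= fTime) ++i;
        // the key frame itself may sit just after fTime: step back if so
        while (i > 0 && m_rTimestamps[i] > fTime) --i;
        return i;
    }
}
```

The frame returned here and the one after it are the two candidates to interpolate between.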

To have a smooth animation, interpolation is basically mandatory: using a very simple linear interpolation (spherical for quaternions) usually works well, as long as the sampling frequency is sufficient.

So, if the playback needs a frame for time 0.127, and I have a frame A at 0.100 and the following one, B, at 0.200, I'm going to interpolate between these two frames according to where the required time falls between them.

    // Frame A is at 0.100, frame B at 0.200, the requested time is 0.127.
    float fInterpFactor = Mathf.InverseLerp(0.100f, 0.200f, 0.127f); // 0.27
    Vector3 vInterpVec = Vector3.Lerp(A.vec, B.vec, fInterpFactor);
    Quaternion qInterpQuat = Quaternion.Slerp(A.rot, B.rot, fInterpFactor);

Anyway, this time I'd like to have the best of both worlds: "raw" clips with timestamped frames, and processed clips, derived from the raw ones by interpolation, straightforward to play and edit.

Tomorrow I'll try to get the basics implemented.

2024-03-12 - Animation clips

Today I basically sketched out the implementation of what I discussed yesterday.

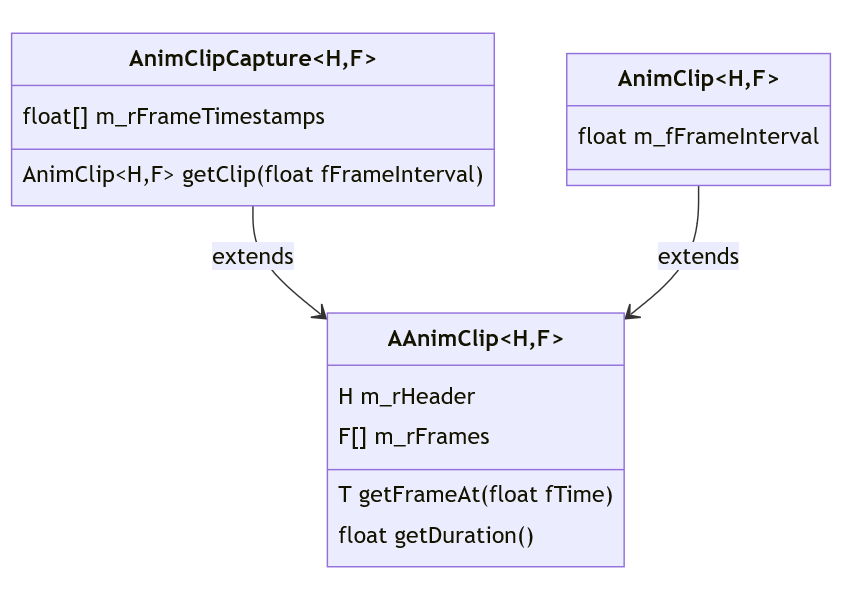

I defined an `AnimClipCapture` with timestamps for each frame, and an `AnimClip` with a fixed frame interval. They both contain some header data, and some frames. So it's natural to define a very simple class hierarchy like this:

I added to `AnimClipCapture` a method to calculate an `AnimClip` from it, by creating frames at a desired, specific time interval, using interpolation on the timestamped frame data.
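As a rough idea of what such a conversion can look like, here's a minimal sketch, not the actual `AnimClipCapture` method. `ClipResampler` and `Resample` are hypothetical names, the capture is assumed to be a pair of parallel arrays (ascending timestamps and frames), and the interpolation is passed in as a delegate so that the sketch doesn't depend on any specific frame type. It only uses plain .NET.

```csharp
using System;

// Hypothetical helper: turns timestamped frames into fixed-interval frames.
public static class ClipResampler {

    // rTimestamps must be ascending and match rFrames one to one.
    // lerp(a, b, t) must return the blend between a (t = 0) and b (t = 1).
    public static F[] Resample<F>(
        float[] rTimestamps, F[] rFrames, float fInterval, Func<F, F, float, F> lerp
    ) {
        if (rFrames.Length == 0 || rTimestamps.Length != rFrames.Length) {
            throw new ArgumentException("timestamps and frames must match and be non-empty");
        }
        if (fInterval <= 0f) throw new ArgumentOutOfRangeException(nameof(fInterval));

        int iLast = rFrames.Length - 1;
        float fStart = rTimestamps[0];
        float fDuration = rTimestamps[iLast] - fStart;
        int iCount = (int)Math.Floor(fDuration / fInterval) + 1;

        F[] rOut = new F[iCount];
        int iSrc = 0;   // source frame at or before the current output time
        for (int i = 0; i < iCount; ++i) {
            float fTime = fStart + i * fInterval;
            // advance to the last source frame not after fTime
            while (iSrc + 1 < iLast && rTimestamps[iSrc + 1] <= fTime) ++iSrc;
            int iNext = Math.Min(iSrc + 1, iLast);
            float fSpan = rTimestamps[iNext] - rTimestamps[iSrc];
            float t = fSpan > 0f
                ? Math.Clamp((fTime - rTimestamps[iSrc]) / fSpan, 0f, 1f)
                : 0f;
            rOut[i] = lerp(rFrames[iSrc], rFrames[iNext], t);
        }
        return rOut;
    }
}
```

Since the output times only move forward, the source cursor never has to go back, so the whole conversion is a single pass over the capture.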

To keep things a little generic, I defined a new simple interface, `IInterpolable<T>`, and put a `where` clause forcing any `F` type to implement it.

    namespace BinaryCharm.Interfaces {

        public interface IInterpolable<T> {
            // Interpolates between this value (f01 = 0) and endValue (f01 = 1).
            T Lerp(T endValue, float f01);
        }

    }

Then, I implemented it in my `PosRot` type:

    public PosRot Lerp(PosRot endValue, float t) {
        Vector3 pos = Vector3.Lerp(m_vPos, endValue.m_vPos, t);
        Quaternion rot = Quaternion.Slerp(m_qRot, endValue.m_qRot, t);
        return new PosRot(pos, rot);
    }

I defined a "frame data" type for the upper body joints and implemented the interface there too:

    public class UpperBodyFrameData :
        ICopyFrom<UpperBodyFrameData>,
        IInterpolable<UpperBodyFrameData> {

        private PosRot[] m_rBodyJointsPoses;

        //[...]

        // Interpolates joint by joint; both frames must have the same joint count.
        public UpperBodyFrameData Lerp(UpperBodyFrameData endValue, float t) {
            PosRot[] rLerpJointsPoses = new PosRot[m_rBodyJointsPoses.Length];
            for (int i = 0; i < m_rBodyJointsPoses.Length; ++i) {
                rLerpJointsPoses[i] = m_rBodyJointsPoses[i].Lerp(
                    endValue.m_rBodyJointsPoses[i], t
                );
            }
            return new UpperBodyFrameData(rLerpJointsPoses);
        }

    }

In the clip header, instead, I stored the skeleton structure, defining the parent joint for each joint. I could skip this and assume that the stored skeleton matches the skeleton at runtime, but it might be useful at some point to have different skeletons for different clips, so I'm gonna store it. I will assume, though, that the skeleton stays the same for the whole clip, so I'm going to store it only once, in the header, and not in the frame data.

    public class UpperBodyHeaderData : ICopyFrom<UpperBodyHeaderData> {

        private SkeletonMapping m_rSkeletonMapping;

        public UpperBodyHeaderData(SkeletonMapping rSkeletonMapping) {
            m_rSkeletonMapping = rSkeletonMapping;
        }

        public void CopyFrom(UpperBodyHeaderData src) {
            m_rSkeletonMapping.CopyFrom(src.m_rSkeletonMapping);
        }

    }

I also sketched two test behaviours, `UpperBodyClipRecorderBhv` and `UpperBodyClipPlayerBhv`.

They will be able to record a clip from the player's body tracking data, and to use a recorded clip to animate a suitable skinned mesh.

I'm using some `using alias` directives to make the code less verbose:

    using UpperBodyClip = AnimClip<UpperBodyHeaderData, UpperBodyFrameData>;
    using UpperBodyClipCapture =
        AnimClipCapture<UpperBodyHeaderData, UpperBodyFrameData>;

Tomorrow I should be able to start trying things out and see if what I have blindly implemented today and yesterday, without even turning on the headset, works as expected.

2024-03-13 - Clip Recorder

Today I completed the `UpperBodyClipRecorderBhv` implementation I started yesterday.

I also added a bit of keyboard-based input to test the functionality, but in a separate behaviour, `UpperBodyClipInputBhv`, so that the recorder code doesn't get cluttered with temporary code.

At some point I will add some kind of UI in VR, so decoupling input handling also helps in validating that the recorder is exposing the required data and methods.

Basically, I press `N` to start recording, and `N` again to stop recording.

Then, I can press `M` to have the latest recorded clip saved to disk.

I added the JSON serialization properties as usual, and saved both the raw capture (with frame timestamps) and a clip derived from it fixing the frame interval at `0.1f` and interpolating as needed.

Of course, a body animation capture saved as JSON serializes to a pretty big file, but I don't care at the moment. I'm storing frames in memory and only writing to disk when pressing `M`, and it's not a functionality that will be in-game, so it's fine if the save locks the game for a moment, while generating and writing the JSON data to mass storage.

A five second "raw" clip is about 3 MB (600 KB when saved at 10 fps).

At the current stage of development, it's fine. I already know that, using proper binary serialization and packing, instead of pretty-printed JSON in single text files, these clips won't be a problem at all.
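To give an idea of the difference, here's a minimal sketch of a packed binary layout, under a few assumptions that are not from the project: a pose is stored as 7 raw floats (position plus rotation quaternion, 28 bytes), every frame has the same number of joints, and the header is reduced to two counts. `Pose` and `PoseBinaryIO` are hypothetical names, and `System.Numerics` types stand in for Unity's `Vector3` and `Quaternion` so the code runs outside Unity.

```csharp
using System;
using System.IO;
using System.Numerics;

// Hypothetical stand-in for a position + rotation pair.
public struct Pose {
    public Vector3 Pos;
    public Quaternion Rot;
    public Pose(Vector3 pos, Quaternion rot) { Pos = pos; Rot = rot; }
}

public static class PoseBinaryIO {

    public const int BytesPerPose = 7 * sizeof(float); // 3 position + 4 rotation floats

    // Layout: int frameCount, int jointCount, then frameCount * jointCount poses.
    public static byte[] Pack(Pose[][] rFrames) {
        int iJoints = rFrames.Length > 0 ? rFrames[0].Length : 0;
        using (var ms = new MemoryStream())
        using (var w = new BinaryWriter(ms)) {
            w.Write(rFrames.Length);
            w.Write(iJoints);
            foreach (Pose[] rFrame in rFrames) {
                if (rFrame.Length != iJoints) {
                    throw new ArgumentException("all frames must have the same joint count");
                }
                foreach (Pose p in rFrame) {
                    w.Write(p.Pos.X); w.Write(p.Pos.Y); w.Write(p.Pos.Z);
                    w.Write(p.Rot.X); w.Write(p.Rot.Y); w.Write(p.Rot.Z); w.Write(p.Rot.W);
                }
            }
            w.Flush();
            return ms.ToArray();
        }
    }

    public static Pose[][] Unpack(byte[] rData) {
        using (var ms = new MemoryStream(rData))
        using (var r = new BinaryReader(ms)) {
            int iFrames = r.ReadInt32();
            int iJoints = r.ReadInt32();
            var rFrames = new Pose[iFrames][];
            for (int f = 0; f < iFrames; ++f) {
                rFrames[f] = new Pose[iJoints];
                for (int j = 0; j < iJoints; ++j) {
                    var pos = new Vector3(r.ReadSingle(), r.ReadSingle(), r.ReadSingle());
                    var rot = new Quaternion(
                        r.ReadSingle(), r.ReadSingle(), r.ReadSingle(), r.ReadSingle());
                    rFrames[f][j] = new Pose(pos, rot);
                }
            }
            return rFrames;
        }
    }
}
```

With this layout the size is fully predictable: 8 bytes of counts plus 28 bytes per joint per frame, before any further packing or compression.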

Tomorrow I'm going to implement the playback and find out if the data I'm saving to disk is gibberish.

2024-03-14 - Clip Player

Today I did clip loading and playback, aiming to visualize the data saved yesterday.

The implementation was pretty straightforward.

I keep track of the current playback time, and fetch from the clip a frame at that time offset.

I use that frame data to drive a `PlayerAvatarDebugGizmos`, as done last week for the game state history.

The only interesting detail is that the method I added to the clip to fetch a frame at a specific timestamp uses interpolation. This way, the playback is smooth even if the source clip is low frequency.

    public T getFrameAt(float fTS) {
        // Indices of the stored frames just before and just after the requested
        // time, clamped so that out-of-range times return the first/last frame.
        int iIdx = Mathf.FloorToInt(fTS / m_fFrameInterval);
        int iIdxBefore = Mathf.Clamp(iIdx, 0, m_rFrames.Length - 1);
        int iIdxAfter = Mathf.Clamp(iIdx + 1, 0, m_rFrames.Length - 1);

        float fTimeBefore = iIdxBefore * m_fFrameInterval;
        float fTimeAfter = iIdxAfter * m_fFrameInterval;

        // Where the requested time falls between the two frames, in [0, 1].
        float fLerp01 = Mathf.InverseLerp(fTimeBefore, fTimeAfter, fTS);
        T ret = m_rFrames[iIdxBefore].Lerp(m_rFrames[iIdxAfter], fLerp01);
        return ret;
    }

This approach makes it possible to play back clips with all kinds of effects (for example, slow motion), while keeping the amount of stored data reasonable. I could save clips at 12 fps and play them back at 120. And if a specific clip needs a finer-grained capture, I can save it at, for example, 60 fps, and it will play at the correct speed without touching the playback code.

Basically, recording and playback speeds are decoupled thanks to interpolation and to the way I decided to store clips.
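As an illustration of that decoupling, here's a minimal sketch of a playback clock that only deals with time, leaving the frame lookup to the clip. `PlaybackClock` and its members are hypothetical names, not the actual player code, and the speed multiplier is an assumption about how effects like slow motion could be driven.

```csharp
using System;

// Hypothetical playback clock: it advances a time offset, the clip turns it into a frame.
public sealed class PlaybackClock {

    private readonly float m_fDuration;

    public float Time { get; private set; }
    public float Speed { get; set; } = 1f;   // 0.5 = slow motion, 2 = double speed
    public bool IsPlaying { get; private set; }

    public PlaybackClock(float fDuration) {
        m_fDuration = Math.Max(0f, fDuration);
    }

    public void Play() { IsPlaying = true; }
    public void Pause() { IsPlaying = false; }
    public void Stop() { IsPlaying = false; Time = 0f; }   // rewinds to the start

    public void Seek(float fTime) {
        Time = Math.Clamp(fTime, 0f, m_fDuration);
    }

    // Call once per rendered frame with the elapsed time since the previous one.
    public void Tick(float fDeltaTime) {
        if (!IsPlaying) return;
        Time = Math.Clamp(Time + fDeltaTime * Speed, 0f, m_fDuration);
        if (Time >= m_fDuration) IsPlaying = false;   // reached the end of the clip
    }
}
```

In a Unity behaviour this would boil down to calling `Tick` with the frame delta time in `Update`, and then asking the clip for the frame at `Time`, whatever the frame rate the clip was saved at.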

I did a quick test using AirLink and it works, even if I need to check some corner cases (like, what happens if a single hand is tracked?) and do a bit of UI work if I want to test in standalone mode, as I'm activating recording/playback with the keyboard.

Too late to capture a video, maybe tomorrow?

2024-03-15 - UI groundwork

In the past days, I've saved and loaded a body clip to/from a hardcoded path. And I can only start/stop recording and playback using a few keyboard keys.

I want to be able to capture and edit clips comfortably in VR, so I'm going to develop a UI for that.

I've noticed in my social media feeds some new UI samples from the Meta SDK v62 that looked interesting, which gives me some motivation to update the SDK version used by my project, which (usually) doesn't hurt.

The update and test build of the project took more time than I would have wanted, but at least nothing broke.

I also managed to try the new samples.

I won't be able to do the UI for the "motion capture" manager today, but I can do some groundwork that will facilitate that tomorrow. Yep, tomorrow: I decided to make up for the "short days" of this week by working on Saturday too, full time.

I copied to my scene the interactive canvas featured in the `RayCanvasFlat` example scene in the SDK.

I had to add a `HandRayInteractor` to my camera rig, and a `PointableCanvasModule` script to the `EventSystem`, and that was it, if I remember correctly.

Then, I imported into my project some basic components and scripts I developed in the past, including a slider extension (particularly suitable for scrubbing through a recording) and a spinner to signal an indefinite wait.

I'm going to use the spinner when recording, and the slider when playing.

I added these components to the canvas and tested that they were properly interactable in VR using the `HandRayInteractor`: previously, I had only used them in screen space, interacting with the mouse.

Tomorrow I'll modify the test canvas so that it actually features the controls needed to record and play back a clip. Then, I will bind the UI to the player and recorder built in the past days, replacing the test keys I've been using so far.

2024-03-16 - Motion Capture UI

Ok, finally time to put the pieces together!

Let's pinpoint the features I want to implement in the UI:

- start/stop recording a clip
- visualize a spinner while recording, with the duration of the recording so far
- start playing a recorded clip
- pause the playback
- resume playing a paused clip
- stop the playback (the clip rewinds to the starting point)
- visualize the playback progress through a slider
- scrub through a clip by dragging the progress slider

This is going to need a few elements laid out on our VR-ready canvas:

- a label indicating the current state, useful to check that everything works correctly
- a label indicating the time (recording duration, or playback progress)
- a draggable slider, visible only during playback
  - showing the playback progress and allowing seeking into the clip
- a spinner, visible only during recording
  - taking the place of the slider, but for an indefinite duration
- buttons: play/pause/stop/rec

For today I'm going to keep it simple and handle a single clip slot: if the slot contains a clip, you can play it, otherwise you must record one, and it will be placed in the slot.

When you have a clip in the slot but decide to record, the clip will be overwritten.
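The single-slot behaviour described above can be sketched as a small state machine. This is only an illustration under assumptions of mine: the state names, the `SingleSlotMocapUi` class and the rule that the rec button is ignored during playback are hypothetical, not taken from the actual UI code.

```csharp
using System;

public enum MocapUiState { Empty, Recording, Ready, Playing, Paused }

// Hypothetical UI logic for a single clip slot. Each Press* method
// returns false when the button press is not valid in the current state.
public sealed class SingleSlotMocapUi {

    public MocapUiState State { get; private set; } = MocapUiState.Empty;
    public bool HasClip { get; private set; }

    // Widget visibility derived from the state.
    public bool SpinnerVisible => State == MocapUiState.Recording;
    public bool SliderVisible =>
        State == MocapUiState.Playing || State == MocapUiState.Paused;

    // The rec button toggles recording; starting a recording overwrites the slot.
    public bool PressRec() {
        switch (State) {
            case MocapUiState.Recording:
                HasClip = true;
                State = MocapUiState.Ready;
                return true;
            case MocapUiState.Empty:
            case MocapUiState.Ready:
                HasClip = false;
                State = MocapUiState.Recording;
                return true;
            default:
                return false;   // assumption: no recording while playing or paused
        }
    }

    public bool PressPlay() {
        if (State != MocapUiState.Ready && State != MocapUiState.Paused) return false;
        State = MocapUiState.Playing;
        return true;
    }

    public bool PressPause() {
        if (State != MocapUiState.Playing) return false;
        State = MocapUiState.Paused;
        return true;
    }

    // Stopping rewinds the clip, so the slot goes back to "ready to play".
    public bool PressStop() {
        if (State != MocapUiState.Playing && State != MocapUiState.Paused) return false;
        State = MocapUiState.Ready;
        return true;
    }
}
```

Keeping the rules in one place like this makes it easy to drive the state label and to enable or disable the buttons consistently.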

I laid out the widgets in a somewhat reasonable manner that doesn't hurt my eyes too much, and then added a bit of logic to make the UI behave in a sensible and robust way, binding it to the player and recorder (which required just a few edits here and there).

It's barebones, but does the job!

Here's a short video of me testing in the editor using AirLink (sorry for the sluggish capture).

There's plenty to do, next week, before moving on to the next feature:

- It must be possible to "clean up" clips by cutting from the beginning and from the end of the recording
- It must be possible to record multiple clips, and to select which one to play
- Ideally, it would be nice to be able to define a clip name directly when recording in VR, using a virtual keyboard
- The playback speed should be configurable
- It might be nice to have a flag to set if the playback must loop or not
- It might be nice to have a flag to set if the playback should "flip handedness" of the clip
- The test visualization of the recording is based on `DebugGizmos`, which only works in the Unity editor. I must do the same but with a skinned mesh, like the one used by the player character.
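The first item on the list is simple for fixed-interval clips, because a time offset maps directly to a frame index. Here's a minimal sketch of the idea, assuming the frames are a plain array; `ClipTrimmer` and `Trim` are hypothetical names, and cut lengths are rounded to the nearest whole frame.

```csharp
using System;

// Hypothetical helper: trims a fixed-interval clip at both ends.
public static class ClipTrimmer {

    // fCutStart / fCutEnd are the seconds to remove from the beginning and
    // from the end of the clip, rounded to the nearest frame.
    public static F[] Trim<F>(F[] rFrames, float fFrameInterval, float fCutStart, float fCutEnd) {
        if (fFrameInterval <= 0f) throw new ArgumentOutOfRangeException(nameof(fFrameInterval));

        int iSkip = (int)Math.Round((double)(Math.Max(0f, fCutStart) / fFrameInterval));
        int iDrop = (int)Math.Round((double)(Math.Max(0f, fCutEnd) / fFrameInterval));

        iSkip = Math.Min(iSkip, rFrames.Length);
        int iCount = Math.Max(0, rFrames.Length - iSkip - iDrop);

        F[] rOut = new F[iCount];
        Array.Copy(rFrames, iSkip, rOut, 0, iCount);
        return rOut;
    }
}
```

Cutting more than the clip contains simply returns an empty array, which the caller can treat as "nothing left to play".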

Hopefully I'll be able to do all this, and more, next week.