Coding conventions and Unity best practices

With a fair bit on the worst practices too

The code for the first 16 days of "game-jammy" prototyping is not something to show to the world, but in the upcoming entries, where I'll start doing things properly (at least, to the best of my abilities, and for my taste), I'll be sharing some snippets here and there.

Hopefully it will be useful to somebody, and to make it more useful I decided that I should write a post about the coding conventions that I'm using for the project.

There's no single best coding convention, or everybody would use it and shut up; instead, there's plenty of debate and strong opinions. There are many conventions which are appropriate for different languages, contexts and domains, and some cosmetic differences that don't really matter and are just a matter of personal taste.

At least, the thing pretty much everybody agrees on is that it is useful to stick to a specific set of coding conventions for a project, especially one involving multiple developers. Which set gets chosen doesn't matter that much at the end of the day.

I'm solo programming, so I don't need to make anybody else happy or convince them to use the style I prefer. And I definitely don't want to convince you, even if I'm going to provide my rationale about the style I use.

Moreover, I'm going to discuss things specifically related to Unity, which go beyond C# coding style.

Conveying meaning

To me, programming is about conveying meaningful information to both the compiler and the reader of the code (including "future me").

It's also about organizing and structuring thoughts, building and composing abstractions in sensible, effective ways.

And sometimes, it's about forgetting all that and writing code that runs fast instead, thinking about the characteristics of the hardware that is going to execute it.

On a lucky day, one manages to write code that does all these things at the same time. Mostly, it's a continuous balancing act between these different angles. It never gets boring!

I try to convey meaning through code in any way I can: naming, spacing/indentation, custom data types, selection and ordering of parameters etc.

Focusing on a specific context

As often happens, if you try to find a generic solution that works in every possible context, things get complicated (and cumbersome).

So, I decide instead to keep it simple and focus on the specific context for the project:

3D game development using C#/Unity

This tells us three important things:

3D game development: we're going to deal with vectors, matrices and quaternions on a daily basis: these are core types of the domain we're working in.

C#: some of our conventions will be related to intrinsic characteristics of that language, and we might check its standard coding conventions

Unity: we're going to interface our code with the Unity APIs, so we might want it to fit nicely with their style - or we might, for a twist, use different conventions so that we can easily distinguish between Unity API calls and our own

When I write code in other languages and for other contexts, I follow different conventions. And nothing is set in stone: these rules should be a useful tool, not a burden. Of course, that changes when working with a team: in that case, it's more important to be consistent to not confuse colleagues.

Comments

Code should be readable and speak for itself. Comments should be a fallback option for cases when, for some reason, it's not possible to write obvious, self-explanatory code.

So, I try to avoid writing comments. Every time you are about to write a comment, you should check if you can instead spend that time to refactor the code so that it wouldn't need it (for example, renaming some parameters, or wrapping related values into a struct).

My position is that comments for obvious code not only don't increase quality, they decrease it. They add noise: text that takes space on your screen and that you end up reading, but that doesn't give you any new useful information.

There's an exception I make. If I'm writing a library that must be used by third parties, especially if source code won't be shared, it's mandatory to provide detailed documentation to end users.

In that case, adding documentation comments to automatically generate documentation is the lesser evil. It still feels very stupid to document obvious things, but providing a library with an API that is not fully documented feels too lame.

Formatting

This is what I consider the silliest part of coding conventions, the one that matters least.

Anyway, I have my preferences:

I use "Egyptian" parenthesis

esthetical preference, with the bonus advantage of having extra lines of code on-screen

I don't skip braces for "single statement" blocks

esthetical preference, with the bonus advantage that makes insertion of further statements safer

sometimes, I misbehave and put on a single line a very simple conditional statement without braces, like

if (iNumber % 2 == 0) ++iEvenCnt;

I indent pressing

TAB, but have my IDE configured to use 4 spaces per indentation levelwasting bytes using four characters and not one doesn't bother me at all (it's a problem from another era), while I think there's a concrete advantage in having consistent indentation when opening files with different editors that could visualize TABs differently

I usually never go past 100 columns and prefer to stay under 80. For this project, I'm going to limit myself to 79 columns, because that's where the code in the Substack code block wraps when viewed in the browser.

having an "old school" columns limit helps me to keep things more readable: parameters for non trivial calls go on multiple lines, and no superfluous indentation levels are used to not waste horizontal space

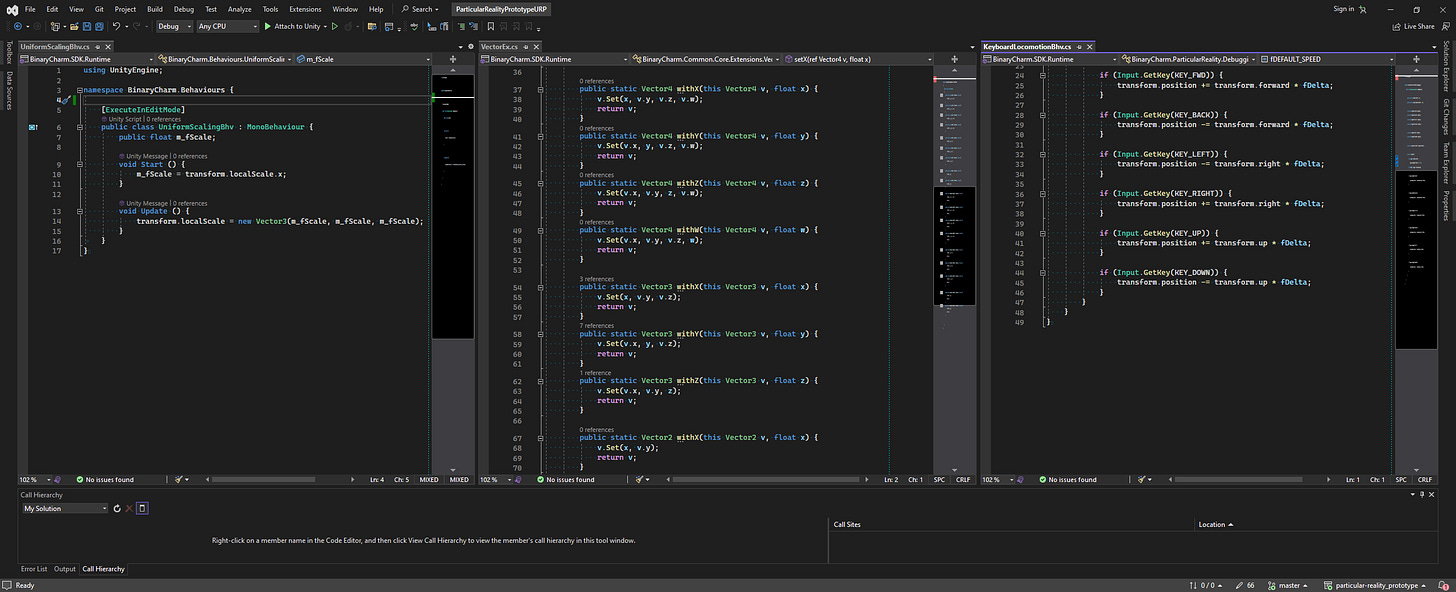

additionally, this setup allows me to have three files opened side by side on the 21:9 monitor I normally use (and at a text size that should not make me go blind)

Naming

Naming things properly is probably the most critical thing in making the code readable.

I use a simplified and customized variant of the Hungarian notation.

Still here? Some developers run away as soon as they read "Hungarian notation", usually screaming "WHAT ARE YOU DOING, IT'S NOT THE NINETIES, THE IDE HIGHLIGHTS THINGS".

Oh, whatever. I find it very useful.

Additionally, you're not always using an IDE when reading code. For example, here reading this devlog, you don't get advanced syntax highlighting or code navigation.

Actually, unfortunately, you don't even get basic syntax highlighting (come on, Substack!)

Let's see a small code snippet and discuss some details.

```csharp
using UnityEngine;

public class SubThing {
    public readonly Vector3 m_vStartPos;
    public readonly Quaternion m_qStartRot;

    public SubThing(Vector3 vStartPos, Quaternion qStartRot) {
        m_vStartPos = vStartPos;
        m_qStartRot = qStartRot;
    }
}

public class Thing {
    private int m_iSomething;
    private float m_fSomethingElse;
    public readonly SubThing m_rSomeReferencedThing;

    private static long s_iThingCounter = 0;

    private const float PI = 3.14159f;
    private static readonly Vector3Int[] rHEX_DIRS = new Vector3Int[6] {
        new Vector3Int( 1,  0, -1), //NE
        new Vector3Int( 0,  1, -1), //N
        new Vector3Int(-1,  1,  0), //NW
        new Vector3Int(-1,  0,  1), //SW
        new Vector3Int( 0, -1,  1), //S
        new Vector3Int( 1, -1,  0), //SE
    };

    public Thing(int iSomething, float fSomethingElse) {
        m_iSomething = iSomething;
        m_fSomethingElse = fSomethingElse;
        m_rSomeReferencedThing = new SubThing(Vector3.up, Quaternion.identity);
        s_iThingCounter += 1;
    }

    public void setSomething(int iSomething) {
        m_iSomething = iSomething;
    }
}
```

I use the `m_` prefix for member variables, and the `s_` prefix for static ones.

Consequently, if there's no `m_` or `s_` prefix in a variable name, it means it's local. You instantly know the scope and lifetime of a variable.

Moreover, you can consistently name constructor parameters, without having to mess with `this`.

After the underscore, or at the beginning of a local variable name, there's a letter indicating roughly the data type.

Why roughly? Some more hardcore variants of the Hungarian notation specify exactly each type in the variable name, using an abbreviated form (e.g. `lpsz` for long pointer to a zero-terminated string).

I prefer instead to use a single letter, identifying "groups" of types:

* `i` : for integral data types (`int`, `uint`, `long`, `ulong`...)

* `f` : for floating point data types (`float`, `double`)

* `b` : for `bool`

* `s` : for `string`

* `v` : for Unity vector data types (`Vector2`, `Vector3`, `Vector4`)

* `q` : for Unity quaternions (`Quaternion`)

* `e` : for any enumeration data types

* `r` : for any reference type

Why don't I distinguish between `int` and `long`, or between `float` and `double`?

While writing an expression, 99% of the time it's useful to instantly know if something is an integer or a floating point, because it can change the result of the expression (integer division vs floating point division). It's often not so critical, instead, to know the range of values supported by each variable: one should think about that when defining it, and occasionally revise it if needed.
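As a tiny illustration of the point about division, here is a minimal sketch (the `DivisionDemo` class and its method names are made up for this example). With the prefix in front of you, it's immediately clear which of the two expressions truncates:

```csharp
using System;

public static class DivisionDemo {
    // Both operands are integral: the division truncates toward zero.
    public static int halfInt(int iTotal) {
        return iTotal / 2;
    }

    // One floating point operand is enough to get a float division.
    public static float halfFloat(int iTotal) {
        float fDivisor = 2f;
        return iTotal / fDivisor;
    }
}
```

Calling `halfInt(7)` gives `3`, while `halfFloat(7)` gives `3.5`. Whether `iTotal` is an `int` or a `long` would not change how the expression reads.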

If there's no letter, it means that it is a custom type using value semantics (so, a `struct` of some kind). So, you might tell me that vectors and quaternions should go into this category, but they're so frequently used and important that I decided to treat them as first class citizens and have specific letters for them.

You might also argue that strings in C# are reference types and so should use `r`, but they're also somewhat "special" and deserve a letter of their own. Moreover, I'm more focused on semantics than representation, and the C# string equality operators are defined to compare the values they contain and not the references (as you get by default with custom reference types).
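The difference in equality semantics can be seen in a short sketch (the `Label` class and the `EqualityDemo` helpers are hypothetical names, used only for illustration):

```csharp
using System;

// A custom reference type that does not override equality.
public class Label {
    public readonly string m_sText;

    public Label(string sText) {
        m_sText = sText;
    }
}

public static class EqualityDemo {
    public static bool stringsEqual() {
        // Two distinct string objects with the same contents.
        string sA = new string('x', 3);
        string sB = new string('x', 3);
        return sA == sB;    // compares the contents
    }

    public static bool labelsEqual() {
        Label rA = new Label("xxx");
        Label rB = new Label("xxx");
        return rA == rB;    // compares the references
    }
}
```

Here `stringsEqual()` returns `true` and `labelsEqual()` returns `false`, which is the kind of behavioural difference the `s` vs `r` prefix is meant to signal.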

So, we have a `m_` or `s_` prefix, or no prefix. Then, a letter which directly improves the deduction of the semantics attached to a variable (how is it compared, how is it passed, what happens if you do logical or arithmetical operations on it?). Camel-case for the rest of the variable name.

Sometimes, I also use suffixes, but I'm not particularly strict or consistent about it: it depends on whether I feel it's useful in that context. Some examples:

* `Map` - dictionary establishing a mapping between two things

* `Arr` - array

* `Cnt` - counter

* `Idx` - index

* `Id` - identifier

* `01` - normalized value (`float` in range `0...1`)

* `Tr` - Unity `Transform`

* `GO` - Unity `GameObject`

For `const` and `static readonly` definitions used like constants (but that because of their type cannot be declared as `const`) I use upper case names, using the underscore as divider.

For names of namespaces, classes, structs, interfaces and enumerations I use PascalCase. I use an additional capital letter prefix following this scheme:

* `I` : for interfaces

* `A` : for abstract classes

* `E` : for enumerations

So, no prefix letter for namespaces, structs and classes.

Type names are usually singular. An exception is when using a flags enumeration, which can represent multiple values packed together and so should be plural.
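Put together, the type naming rules might look like this in practice. This is only a sketch: every type name below is invented for the example.

```csharp
using System;

public interface IDamageable {              // I: interface
    void applyDamage(int iAmount);
}

public abstract class AUnit : IDamageable { // A: abstract class
    protected int m_iHealth = 10;

    public void applyDamage(int iAmount) {
        m_iHealth -= iAmount;
    }

    public int getHealth() {
        return m_iHealth;
    }
}

public class Soldier : AUnit {              // no prefix: concrete class
}

public enum EDirection {                    // E, singular: one value
    North,
    South,
}

[Flags]
public enum EStatusEffects {                // E, plural: packed flags
    None   = 0,
    Burned = 1 << 0,
    Frozen = 1 << 1,
}
```

A variable of type `EStatusEffects` can hold `Burned | Frozen` at the same time, which is why the plural reads more naturally there than it would for `EDirection`.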

I always use the `Bhv` suffix for classes extending `MonoBehaviour`.

I always specify access level modifiers (`private`, `public`, `protected`...)

I use camelCase for method names. Yes, I know that Unity uses PascalCase, but seeing the two mixed up doesn't bother me. Actually, it helps me to instantly distinguish calls to methods I defined.

I do not use C# properties. I don't like having any computation hidden behind the syntax normally associated with simply accessing a field (a basic, usually constant time operation).

I use C# events and not `UnityEvent`. I follow the common convention of using a verb related to the moment the event is raised, and of always using a companion "On" method to raise it in a controlled way. Short snippet:

```csharp
using System;

// "Something" stands for whatever payload type the event carries.
public class EventSender {
    public event Action<Something> Activating; // raised before activation
    public event Action Activated; // raised after activation

    private Something m_rSomething;

    protected virtual void OnActivating(Something rSomething) {
        if (Activating != null) Activating(rSomething);
    }

    protected virtual void OnActivated() {
        if (Activated != null) Activated();
    }

    private void activateSomething() {
        OnActivating(m_rSomething);
        //m_rSomething.activateOperations();
        OnActivated();
    }
}
```

I often use `Action` / `Func` and rarely define custom delegate types.

Unity best (and worst) practices

I continuously iterate in trying to find better and better structure not only for code, but also for scenes. That said, I came to pretty strong conclusions about some core principles that I stick to, as I have experienced that they help me keep things manageable.

The typical "first steps" Unity tutorials, as soon as you have two scripts on different nodes and you need to put them in communication, tell you to add a public field to link them together via inspector.

Basically, if you have `ScriptA` on `NodeA` and `ScriptB` on `NodeB`, and you want to call from `ScriptB` a public method `SomeMethod` defined in `ScriptA`, they tell you to do this:

```csharp
// ScriptA.cs
using UnityEngine;

public class ScriptA : MonoBehaviour {
    public void SomeMethod() {
        // do things
    }
}
```

```csharp
// ScriptB.cs
using UnityEngine;

public class ScriptB : MonoBehaviour {
    public ScriptA onNodeA;

    void Update() {
        if (Input.GetKeyDown(KeyCode.Space)) {
            onNodeA.SomeMethod();
        }
    }
}
```

The `onNodeA` definition in `ScriptB` is shown in the inspector of `NodeB` as a field into which you can drag any node with `ScriptA` attached to it. That action basically puts in the scene data some information that Unity, at runtime, will deserialize and use to fill in the `onNodeA` variable with a reference to the `ScriptA` instance on `NodeA`.

So, one can start linking nodes from any corner of the scene to any other and have each one call methods on the others.

It might sound fine and it can work for a very simple demo or for the scope of a tutorial, but it's a recipe for disaster.

The problem is that there is no enforcement of any structure on what node refers to what other node. You might end up linking together components from nodes in totally different subtrees of the scene hierarchy. And if you want to make things worse, you just have to do it at runtime, using methods like `GameObject.Find("nodeName")` or `GetComponent()`.

If you want to make your project completely unmanageable, you just have to add to the mix the use of Unity events, defining via inspector what method gets called when a certain event occurs.

If you had any hope of figuring out how something worked by following references and calls in the code, now you've lost it, because there's nothing to see or find on the C# side of things: good luck untangling the mess by checking the settings of each node through the inspector.

Ok, I think I have described the worst thing I've encountered when dealing with third-party projects and tutorials.

This is as good a moment as any to remember that the value a system provides to a user comes both from what it enables them to do (flexibility, power) and from what it prevents them from doing.

Unity gives you plenty of flexibility, but doesn't prevent you from making a total mess of your project, and actually, unfortunately, sometimes encourages you to do it while attempting to "make things simple" (see: events wiring via inspector).

That said, something that gives you power and flexibility can still be very useful if you restrict yourself and use discipline to keep things manageable.

It's a bit like with C++, which provides incredible flexibility (at the cost of huge complexity). You can make great use of it if you use a very limited subset of features and follow sensible guidelines.

But then again, in time the other side of the coin shows its value: what a system can provide by imposing restrictions that help you stay on the good path. And that, I guess, is part of the reason why Rust is gaining traction and usage, and is often suggested, even by some C++ experts with decades of experience, as a viable (if not better) option for new projects.

So, how do I keep things manageable in Unity?

First, I make very controlled use of `MonoBehaviour`.

The data and logic of projects stays handled in "normal" C# classes and structs, structured like I would do in any other engine.

A script extending `MonoBehaviour` attached to a node is, for me, something that enables me to interact with that node, its components, or (with some limits) its subtree. If, for example, I have a cube object with a rigid body and a renderer, and I need to manipulate these components, I create a `CubeBhv` script and attach it to the cube.

In that `CubeBhv`, I access the `Rigidbody` or `MeshRenderer` components, and expose methods to indirectly manipulate them. For example, I might do:

```csharp
using UnityEngine;

public class CubeBhv : MonoBehaviour {
    [SerializeField] private MeshRenderer m_rMeshRenderer;
    [SerializeField] private Rigidbody m_rRigidBody;

    public void setHighlighted(bool bVal) {
        m_rMeshRenderer.material.color = bVal ? Color.red : Color.white;
    }

    //[...]
}
```

In other parts of the code, I will have a reference to the `CubeBhv` on the node, and will call `setHighlighted`, but I will never directly access the `MeshRenderer` or the `Rigidbody`: `CubeBhv` is my unique interface to the manipulation of that `GameObject`, and abstracts the low level details about what components are accessed in doing so.

Also, note that I never use `public` fields for references to components. They should only be accessible from inside the behaviour itself. The correct way to make them editable through the inspector is marking the field with the `SerializeField` attribute.

This principle of isolating the access to components can be extended to a whole hierarchy of nodes. Let's see an example hierarchy:

```
A
|
--- B
|   |
|   --- C
|
|
--- X
    |
    --- Y
```

If B refers to C, and A refers to B, it's all fine. If A manipulates a component of C, it must do it indirectly, using B. Bypassing B and directly linking A to C is prohibited.

If there is no link between A and X, instead, it's fine for A to link directly to Y.

Linking between nodes in different subtrees (let's say, from B to Y) is prohibited.

If we need to model an interaction between nodes in different subtrees, their interaction should be handled by a common ancestor. In this example, only A would be able to have B and Y interact with each other.
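Here is a rough sketch of how that mediation might look in code. The `ABhv`, `BBhv` and `YBhv` names, the `Light` on B and the "pressed" state on Y are all assumptions made up for the example; each class would live in its own file.

```csharp
using UnityEngine;

// BBhv.cs - on node B: the only gateway to B and its subtree
public class BBhv : MonoBehaviour {
    [SerializeField] private Light m_rLight;

    public void setLit(bool bVal) {
        m_rLight.enabled = bVal;
    }
}

// YBhv.cs - on node Y
public class YBhv : MonoBehaviour {
    private bool m_bPressed;

    public bool isPressed() {
        return m_bPressed;
    }

    public void setPressed(bool bVal) {
        m_bPressed = bVal;
    }
}

// ABhv.cs - on node A, the common ancestor of B and Y
public class ABhv : MonoBehaviour {
    [SerializeField] private BBhv m_rB;
    [SerializeField] private YBhv m_rY;

    private void Update() {
        // B and Y never reference each other: A relays the state.
        m_rB.setLit(m_rY.isPressed());
    }
}
```

Neither `BBhv` nor `YBhv` knows the other exists, so if the light on B misbehaves, the only scripts worth inspecting are `BBhv` and `ABhv`.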

By following this strict hierarchical organization, one avoids bad surprises. If something unexpected happens on a component of a node, there's a very limited subset of scripts that could be responsible.

Also, it's a type of organization that scales pretty easily.

One can instantiate a prefab and only access it through a single script attached to its root node. That script will deal with the prefab subtree, and every time things get too complicated for a single script, you can break it down into more manageable parts by adding more child nodes/behaviours.

The same kind of approach can also be used with secondary scenes loaded additively, with a few quirks.

That said, there is in practice some space for "global" things accessed ignoring this organization, for example by using singletons. Singletons are considered an enemy of clean code architecture, and for very good reasons.

However, especially considering some of the Unity quirks, there are practical reasons to use a singleton here and there.

It's not like there are no better options, but they are usually more complicated and so they must be worth the increase in complexity.

For example, let's consider some debug materials that I use all the time. I can add this `ResourcesManagerBhv` script on a node, and link there the materials via inspector.

```csharp
using UnityEngine;
using BinaryCharm.Behaviours;

namespace BinaryCharm.ParticularReality.Behaviours {

    public class ResourcesManagerBhv : ManagerBhv<ResourcesManagerBhv> {

        [SerializeField] public Material m_rDebugRedMat;
        [SerializeField] public Material m_rDebugBlueMat;

        private void Awake() {
            registerInstance(this);
        }

    }

}
```
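The `ManagerBhv<T>` base class is not shown in this post. A minimal sketch of what such a base class could look like follows; only the names `ManagerBhv`, `registerInstance` and `i()` come from the snippets here, while the body is a guess and not necessarily the real implementation.

```csharp
using UnityEngine;

namespace BinaryCharm.Behaviours {

    // Hypothetical reconstruction of a generic singleton base class.
    public class ManagerBhv<T> : MonoBehaviour where T : ManagerBhv<T> {

        private static T s_rInstance;

        // Called by the concrete manager, typically from Awake().
        protected static void registerInstance(T rInstance) {
            s_rInstance = rInstance;
        }

        // Global accessor; returns null until an instance registers.
        public static T i() {
            return s_rInstance;
        }

    }

}
```

Because the static field belongs to the closed generic type, each concrete manager gets its own separate instance slot.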

Then, to assign the red debug material, I can easily do:

```csharp
GetComponent<MeshRenderer>().material =
    ResourcesManagerBhv.i().m_rDebugRedMat;
```

In the 16 days PoC, I went for the easy road and used (and abused) singletons, creating a bunch of "manager" classes that I could have working together as needed.

It was simple enough that I could pull it off, but now that we start doing things properly we're going to steer away from this simple approach and progressively improve upon it.

That said, I refuse any dogma and encourage everybody to do the same.

Sometimes, even a singleton can be ok, like a `goto` statement. Just be careful of the velociraptors.

Be wary of event based programming

The use of events and event handlers is a very common approach that is valuable because it allows you to design and develop decoupled subsystems.

A subsystem A listens for events from subsystem B, does its thing when an event occurs, and then maybe sends some events itself.

Compared to a method call from B to A, there's the great advantage that A and B don't have to know much of each other. They only, to put it simply, send and receive messages according to some known description.

This is good, but it comes at a price. Not knowing much of each other often means being unable to inquire about the state of each other, and it often happens that the actions one system must take when an event occurs are determined not only by the data carried by the event itself, but also by other factors. So one can be forced to build a state based on the influx of events, and sometimes to replicate logic and state that are already present elsewhere, with the possibility of introducing subtle mistakes.

Other problems often surface. For example, the order in which the event handlers are called can often change the behaviour of the system, and that order is not explicit in the code. Debugging also becomes harder.
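The ordering issue is easy to reproduce with plain C# events. In this minimal sketch (the `Door` class and the two handlers are invented for the example), the handlers run in subscription order, and nothing at the place where the event is raised tells you what that order is:

```csharp
using System;
using System.Collections.Generic;

public class Door {
    public event Action Opened;

    public void open() {
        if (Opened != null) Opened();
    }
}

public static class HandlerOrderDemo {
    // Returns the order in which the two handlers ran.
    public static List<string> run(bool bAudioFirst) {
        List<string> rLog = new List<string>();
        Door rDoor = new Door();
        Action rAudio = () => rLog.Add("audio");
        Action rLight = () => rLog.Add("light");

        // In a real project these subscriptions would be scattered
        // across unrelated files; here they are just swapped.
        if (bAudioFirst) {
            rDoor.Opened += rAudio;
            rDoor.Opened += rLight;
        } else {
            rDoor.Opened += rLight;
            rDoor.Opened += rAudio;
        }

        rDoor.open();
        return rLog;
    }
}
```

`run(true)` logs "audio" then "light", and `run(false)` logs them the other way round, with `Door` itself unchanged.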

If you take a reasonably complicated system, you get to a point where all kinds of events can occur at any time and in any order, and things can become unmanageable.

Ever had a right click menu in Windows that stays open when it should have closed, just because a mouse event got lost along the way? And what about the torch in Android? Did you ever notice any strange interaction between the state of the torch and the use of the camera? It certainly happened to me that I turned on the torch, then launched the camera app, which turned off the torch - but after that the torch toggle was still on, as if the torch was enabled. And to turn it on again while using the camera, I had to press the toggle twice. My friends, that screams to me "event based programming gone wrong".

So, shall we ban event based programming? Of course not, but we should only use it when it's really needed and it's critical to obtain a reusable, loosely coupled subsystem.

More often than not, it's very possible to manage things in a more robust, simple and also efficient manner if one manages to use other approaches.

There's always time to make things more complicated and decoupled later if the need arises, but it's better to try and keep things simple first.

Simple like what? Well, subsystem A changes a shared state. B reads it and does its thing, maybe changing another piece of shared state that A will read in the next frame.

Also, a bit of polling, paired with dirty flags or with lastUpdated timestamps can help in keeping things simple and faster than you might imagine (profile, always profile!).
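A minimal sketch of the shared state plus dirty flag idea, with no events involved. The `SharedScore` and `ScoreLabel` classes are hypothetical, and the sketch assumes a single consumer of the flag:

```csharp
using System;

// Shared state written by one subsystem and polled by another.
public class SharedScore {
    private int m_iValue;
    private bool m_bDirty;

    public void add(int iPoints) {
        m_iValue += iPoints;
        m_bDirty = true;
    }

    public int getValue() {
        return m_iValue;
    }

    // Returns true if the value changed since the last call.
    public bool consumeDirty() {
        bool bWasDirty = m_bDirty;
        m_bDirty = false;
        return bWasDirty;
    }
}

public class ScoreLabel {
    private readonly SharedScore m_rScore;
    private string m_sText = "Score: 0";
    private int m_iRefreshCnt = 0;

    public ScoreLabel(SharedScore rScore) {
        m_rScore = rScore;
    }

    // Meant to be called once per frame, e.g. from an Update().
    public void tick() {
        if (!m_rScore.consumeDirty()) return;
        m_sText = "Score: " + m_rScore.getValue();
        m_iRefreshCnt += 1;
    }

    public string getText() {
        return m_sText;
    }

    public int getRefreshCnt() {
        return m_iRefreshCnt;
    }
}
```

Two `add` calls in the same frame cause a single refresh at the next `tick()`, and frames with no changes cost one boolean check. With several readers, a per-reader timestamp comparison would fit better than a flag that gets consumed.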

Asynchronous programming

This would require an article by itself and this post is already too long.

So, I will just say, in the context of Unity: I do not use coroutines at all. Instead, I use `async/await` through `UniTask` when it makes sense. Which is: almost never.

It's fine to use `async/await` for things like background loading of resources, or network calls to a REST API. Having tasks and futures is definitely much better than chaining a bunch of callbacks which themselves do non blocking API calls (callbacks bring problems similar to those described discussing event based programming).

But the moment you use an asynchronous call in gameplay code, you're asking for trouble, because basic things like pausing the game become a mess.

Every time I tried doing it, I ended up regretting it and switching to a FSM based approach.

If you are using an API or a third party component that offers you asynchronous operations, and you want or need to hide that from the rest of your system, you can (and often, should) implement a FSM that wraps those calls and only exposes what's needed.
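As a sketch of that wrapping idea, here is a small FSM that hides an asynchronous operation behind a per-frame `tick`. It uses plain .NET `Task` rather than `UniTask` to stay self-contained, and `LoaderFsm`, `ELoadState` and the `rStartOp` parameter are all names invented for the example:

```csharp
using System;
using System.Threading.Tasks;

public enum ELoadState { Idle, Loading, Ready, Failed }

public class LoaderFsm {
    private ELoadState m_eState = ELoadState.Idle;
    private Task<string> m_rTask;
    private string m_sResult;

    public ELoadState getState() {
        return m_eState;
    }

    public string getResult() {
        return m_sResult;
    }

    // rStartOp stands for whatever asynchronous API is being wrapped.
    public void start(Func<Task<string>> rStartOp) {
        if (m_eState == ELoadState.Loading) return;
        m_rTask = rStartOp();
        m_eState = ELoadState.Loading;
    }

    // Called once per frame by the game loop; it never blocks.
    public void tick(bool bPaused) {
        if (bPaused) return;
        if (m_eState != ELoadState.Loading) return;
        if (!m_rTask.IsCompleted) return;

        if (m_rTask.IsFaulted || m_rTask.IsCanceled) {
            m_eState = ELoadState.Failed;
        } else {
            m_sResult = m_rTask.Result;
            m_eState = ELoadState.Ready;
        }
        m_rTask = null;
    }
}
```

The rest of the game only ever sees `getState()`, so a completed task cannot change anything while the game is paused: the transition to `Ready` happens at the first unpaused `tick`.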

The idea of the "game loop" where there's a simulated game world, with a state that can be affected by user input, and a renderer that produces a frame based on that state, is particularly well suited to an implementation involving FSMs.

So, who's up for a t-shirt saying "When in doubt, use a FSM"?

Is that it?

I realize that I might have forgotten about important things, and that at some point I might change my mind about those that I didn’t forget. I might update this “special” post in the future.

Anyway, that’s it for now, and next week you’re going to get the first post about the first week of “post PoC” development: that’s enough rambling, there’s a game to be made!